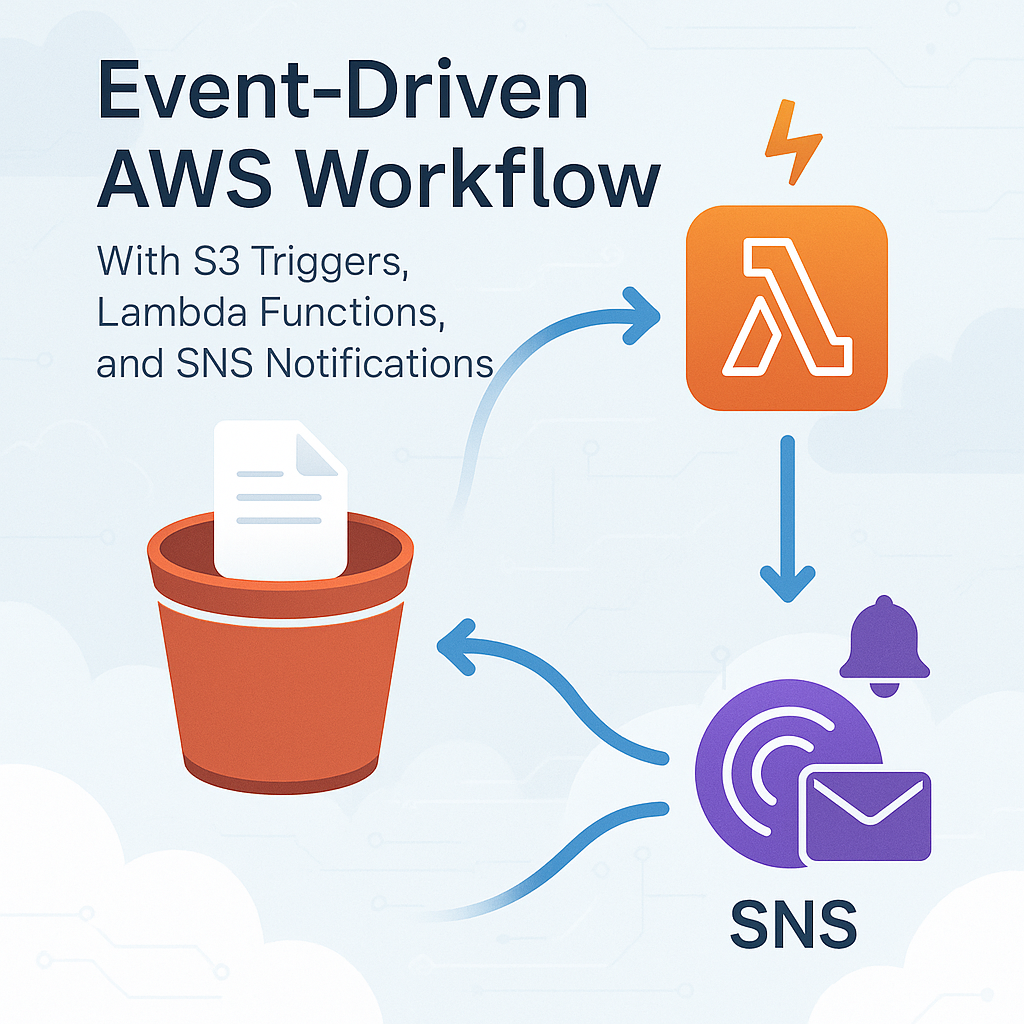

Automate real-time notifications with a flexible serverless workflow

Introduction

Event-driven architectures allow cloud systems to react to changes with minimal delay. Among AWS’s serverless tools, combining S3, Lambda, and SNS is a powerful and widely used pattern for building scalable, automated workflows.

In this blog, I’ll walk you through setting up this workflow and share a globally relatable use case that engineers across industries might face.

NOTE: In my earlier blogs, I have covered S3 → SNS → Fan-out patterns. This blog focuses on adding Lambda as an intermediate processor for better flexibility and control before forwarding notifications.

A Simple, Relatable Use Case

Across various industries, one common need is to notify teams immediately when a new file is uploaded — be it images, reports, or configuration files.

Example Scenario:

Imagine a media company where multiple photographers upload images to an S3 bucket after an event shoot. The editing team needs to be notified instantly whenever new photos arrive so they can start working without delay.

To automate this:

- The S3 bucket receives the uploaded images.

- A Lambda function checks the file type and ensures it matches expected formats (like `.jpg` or `.png`).

- The Lambda then publishes a message to an SNS topic, notifying the editing team via email and Slack that “New event photos have been uploaded.”
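The file-type check in the second step can be as simple as an extension allow-list. This is a minimal sketch, assuming the accepted formats are `.jpg`, `.jpeg`, and `.png`. `is_expected_image` and `ALLOWED_EXTENSIONS` are illustrative names, not part of the original setup. Keep in mind that an extension check only inspects the object name, not its bytes.

```python
import os.path

# Illustrative allow-list; adjust to the formats your team actually expects
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def is_expected_image(key: str) -> bool:
    """Return True if the S3 object key ends with an allowed image extension."""
    # splitext returns the last extension, e.g. ('shoot/IMG_0042', '.JPG')
    _, ext = os.path.splitext(key)
    # Compare case-insensitively so 'IMG.PNG' is accepted too
    return ext.lower() in ALLOWED_EXTENSIONS


print(is_expected_image("shoot/IMG_0042.JPG"))  # True
print(is_expected_image("shoot/notes.txt"))     # False
```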

This eliminates the need for manual monitoring of the S3 bucket, reduces delays, and ensures that the team is ready to take action as soon as content is available.

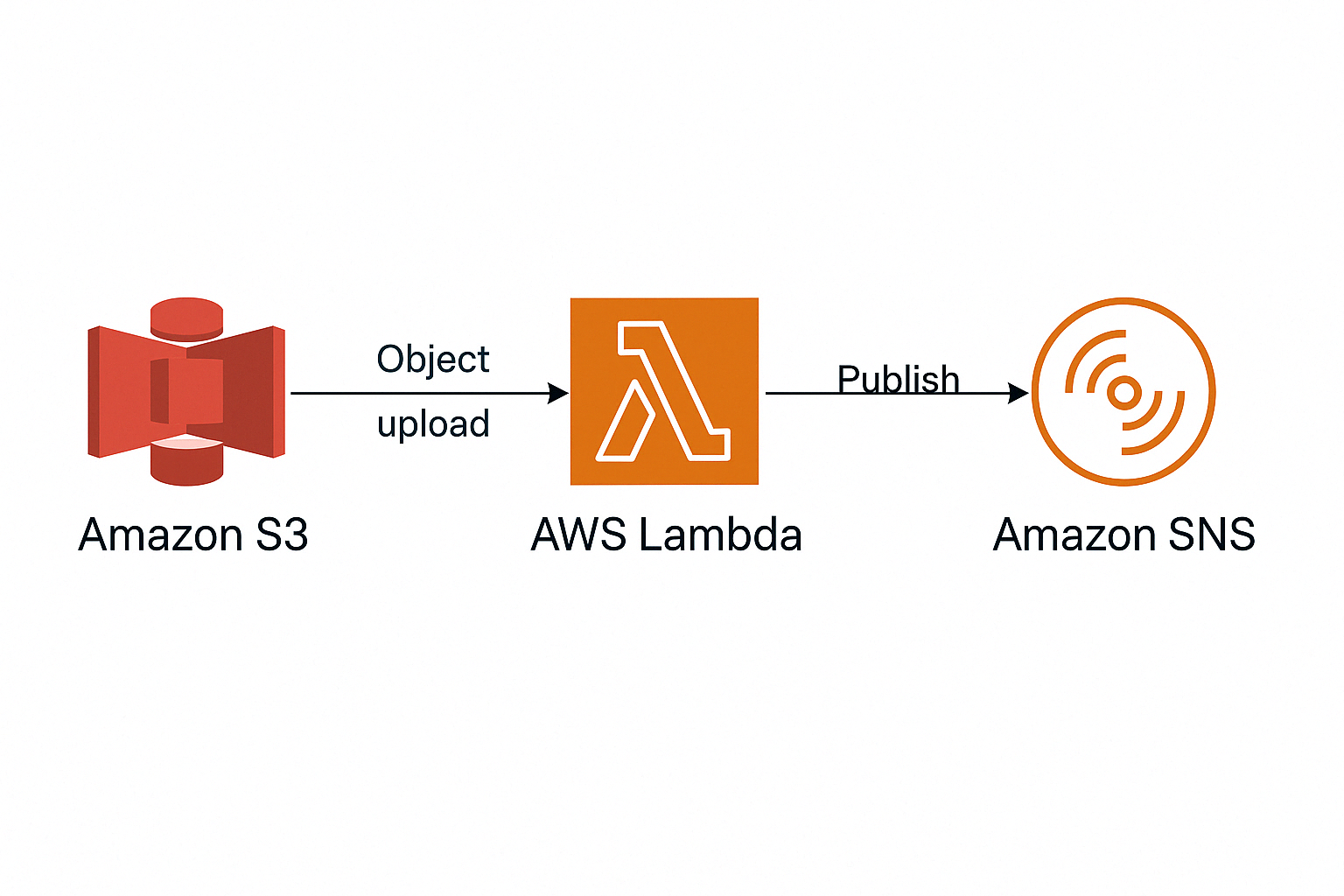

Architecture Overview

The architecture follows this sequence:

Amazon S3 -> AWS Lambda -> Amazon SNS

Step-by-Step Setup

1. S3 Bucket Configuration

- Enable event notifications on `s3:ObjectCreated:*`.

- Set Lambda as the target.
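If you configure the trigger from a script instead of the console, S3 expects a notification payload shaped like the one below. This is a sketch. `build_notification_config` is a hypothetical helper, and the optional `suffix` key filter is an extra that the rest of this post does not use. The returned dict is what boto3's `put_bucket_notification_configuration` accepts as its `NotificationConfiguration` argument.

```python
from typing import Optional


def build_notification_config(function_arn: str, suffix: Optional[str] = None) -> dict:
    """Build a NotificationConfiguration that routes ObjectCreated events to Lambda."""
    lambda_config = {
        "LambdaFunctionArn": function_arn,
        # The wildcard covers Put, Post, Copy and CompleteMultipartUpload
        "Events": ["s3:ObjectCreated:*"],
    }
    if suffix:
        # Optional key filter so only matching objects invoke the function
        lambda_config["Filter"] = {
            "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
        }
    return {"LambdaFunctionConfigurations": [lambda_config]}


# Usage with boto3 (requires AWS credentials, so it is not executed here):
#   s3 = boto3.client("s3")
#   s3.put_bucket_notification_configuration(
#       Bucket="global-invoice-bucket-demo",
#       NotificationConfiguration=build_notification_config(function_arn),
#   )
```

Note that `put_bucket_notification_configuration` replaces the bucket's entire notification configuration. S3 also rejects the request unless the function already grants `s3.amazonaws.com` permission to invoke it.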

2. SNS Topic Creation

- Create the SNS topic.

- Add subscribers like email, SMS, or SQS.

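- Make sure Lambda is allowed to publish to the topic. Within a single account, granting `sns:Publish` on the Lambda execution role is enough; a topic policy is only required when publishing across accounts.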
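If you prefer scripting the topic setup, the SNS client calls involved are `create_topic` and `subscribe`. This is a minimal sketch. The client is passed in as a parameter so the function can be exercised without AWS credentials. `create_topic_with_subscribers` and the example endpoints are hypothetical.

```python
def create_topic_with_subscribers(sns_client, topic_name, subscribers):
    """Create (or look up) an SNS topic and subscribe each (protocol, endpoint) pair."""
    # create_topic is idempotent: an existing topic with this name returns its ARN
    topic_arn = sns_client.create_topic(Name=topic_name)["TopicArn"]
    for protocol, endpoint in subscribers:
        # Email subscriptions stay "pending confirmation" until the recipient confirms
        sns_client.subscribe(TopicArn=topic_arn, Protocol=protocol, Endpoint=endpoint)
    return topic_arn
```

With a real client, the call would look like `create_topic_with_subscribers(boto3.client("sns"), "invoice-upload-topic", [("email", "editors@example.com")])`.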

3. Lambda Function Creation

- Read the event triggered by S3.

- Validate the file and extract metadata.

- Publish a formatted message to the SNS topic.
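The three steps above come down to a small amount of event handling. This is a minimal sketch, assuming a standard S3 ObjectCreated event. `extract_uploads` and `format_message` are illustrative names. Including the object size in the message is just one example of extracted metadata.

```python
import urllib.parse


def extract_uploads(event: dict) -> list:
    """Return (bucket, key, size) for every record in an S3 ObjectCreated event."""
    uploads = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Keys are URL-encoded in the event, e.g. spaces arrive as '+'
        key = urllib.parse.unquote_plus(s3["object"]["key"])
        uploads.append((s3["bucket"]["name"], key, s3["object"].get("size", 0)))
    return uploads


def format_message(bucket: str, key: str, size: int) -> str:
    """Build the human-readable text that would be published to SNS."""
    return f"New file uploaded: {key} ({size} bytes) in bucket: {bucket}"
```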

NOTE: I have already explained similar configurations (especially around S3 event notifications and SNS fan-out patterns) in my previous blogs.

Do check them out for detailed reference and additional use cases:

IAM & Permissions

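- Lambda Execution Role:

  - Needs `s3:GetObject` to read from the bucket (only if the function downloads the object; the S3 event already carries the bucket name and key).

  - Needs `sns:Publish` to send messages to SNS.

- S3 Permission:

  - Allow S3 to invoke the Lambda function (a resource-based permission on the function).

- SNS Permission:

  - SNS must allow publishing from Lambda’s role. In a single account, the role’s `sns:Publish` grant is sufficient; add a topic policy only for cross-account publishing.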
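These permissions can be expressed as a least-privilege policy document for the execution role. The sketch below generates that JSON in Python. `lambda_policy_document` is a hypothetical helper. The `s3:GetObject` statement is added only when `read_objects=True`, because the function in this post never downloads the object itself.

```python
import json


def lambda_policy_document(topic_arn: str, bucket_name: str, read_objects: bool = False) -> str:
    """Return a least-privilege IAM policy (JSON) for the Lambda execution role."""
    statements = [
        {
            "Effect": "Allow",
            "Action": "sns:Publish",
            # Scope publishing to the single notification topic
            "Resource": topic_arn,
        }
    ]
    if read_objects:
        statements.append(
            {
                "Effect": "Allow",
                "Action": "s3:GetObject",
                # Object-level ARN: the bucket ARN followed by '/*'
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        )
    return json.dumps({"Version": "2012-10-17", "Statement": statements}, indent=2)
```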

Terraform Script for Infrastructure Setup

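Here’s a Terraform configuration that provisions the entire setup. The resource names below come from an invoice-upload example; rename them to fit your own use case (such as the photo uploads described earlier):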

```hcl
provider "aws" {
  region = "us-east-1"
}

resource "aws_s3_bucket" "invoices" {
  bucket = "global-invoice-bucket-demo"
}

resource "aws_sns_topic" "notify_finance" {
  name = "invoice-upload-topic"
}

resource "aws_iam_role" "lambda_exec" {
  name = "lambda_s3_exec_role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17",
    Statement = [{
      Effect    = "Allow",
      Principal = { Service = "lambda.amazonaws.com" },
      Action    = "sts:AssumeRole"
    }]
  })
}

# CloudWatch Logs permissions. aws_iam_role_policy_attachment attaches the
# policy to this role only (aws_iam_policy_attachment is exclusive account-wide).
resource "aws_iam_role_policy_attachment" "lambda_basic" {
  role       = aws_iam_role.lambda_exec.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
}

# Least-privilege permission to publish to the notification topic
resource "aws_iam_role_policy" "lambda_publish" {
  name = "lambda-sns-publish"
  role = aws_iam_role.lambda_exec.id

  policy = jsonencode({
    Version = "2012-10-17",
    Statement = [{
      Effect   = "Allow",
      Action   = "sns:Publish",
      Resource = aws_sns_topic.notify_finance.arn
    }]
  })
}

data "archive_file" "lambda" {
  type        = "zip"
  source_file = "lambda_function.py"
  output_path = "lambda_function_payload.zip"
}

resource "aws_lambda_function" "process_upload" {
  function_name    = "processInvoiceUpload"
  role             = aws_iam_role.lambda_exec.arn
  runtime          = "python3.12"
  handler          = "lambda_function.lambda_handler"
  filename         = data.archive_file.lambda.output_path
  source_code_hash = data.archive_file.lambda.output_base64sha256

  # The function reads the topic ARN from this variable
  environment {
    variables = {
      SNS_TOPIC_ARN = aws_sns_topic.notify_finance.arn
    }
  }
}

resource "aws_lambda_permission" "allow_s3" {
  statement_id  = "AllowExecutionFromS3"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.process_upload.function_name
  principal     = "s3.amazonaws.com"
  source_arn    = aws_s3_bucket.invoices.arn
}

resource "aws_s3_bucket_notification" "bucket_notification" {
  bucket = aws_s3_bucket.invoices.id

  lambda_function {
    lambda_function_arn = aws_lambda_function.process_upload.arn
    events              = ["s3:ObjectCreated:*"]
  }

  # S3 validates the destination when the notification is created,
  # so the invoke permission must exist first
  depends_on = [aws_lambda_permission.allow_s3]
}
```

Lambda Function Python Code

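```python
import json
import os
import urllib.parse

import boto3

# Created once per execution environment and reused across warm invocations
sns = boto3.client('sns')

# Set by the Terraform configuration (SNS_TOPIC_ARN environment variable)
TOPIC_ARN = os.environ['SNS_TOPIC_ARN']


def lambda_handler(event, context):
    # A single S3 notification can contain more than one record
    for record in event['Records']:
        bucket = record['s3']['bucket']['name']
        # Object keys arrive URL-encoded (for example, spaces become '+')
        key = urllib.parse.unquote_plus(record['s3']['object']['key'])

        message = f"New file uploaded: {key} in bucket: {bucket}"

        sns.publish(
            TopicArn=TOPIC_ARN,
            Message=message,
            Subject='S3 Upload Notification'
        )

    return {
        'statusCode': 200,
        'body': json.dumps('SNS message sent!')
    }
```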

Testing the Flow

- Upload a test file into the S3 bucket.

- Check Lambda execution logs via CloudWatch.

- Confirm that your SNS subscribers received the message (e.g., email or SQS queue). Email subscribers must confirm their subscription before they receive anything.
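Before uploading real files, you can exercise the handler logic locally with a hand-built event. This sketch keeps only the event fields the handler reads. It replaces the SNS client with an injected `publish` callable, so it runs without AWS credentials. `make_s3_test_event` and `handle` are hypothetical helpers that mirror the Lambda function above; they are not a replacement for it.

```python
def make_s3_test_event(bucket: str, key: str, size: int = 0) -> dict:
    """Build a minimal S3 ObjectCreated:Put event with the fields the handler reads."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket},
                    "object": {"key": key, "size": size},
                },
            }
        ]
    }


def handle(event: dict, publish) -> int:
    """Handler logic with the SNS publish call injected; returns the number of messages sent."""
    sent = 0
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        key = record["s3"]["object"]["key"]
        publish(f"New file uploaded: {key} in bucket: {bucket}")
        sent += 1
    return sent


published = []
count = handle(make_s3_test_event("global-invoice-bucket-demo", "test.jpg"), published.append)
print(count, published)
# 1 ['New file uploaded: test.jpg in bucket: global-invoice-bucket-demo']
```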

Best Practices

- Always apply least-privilege IAM policies.

- Use environment variables to store the topic ARN and bucket names.

- Enable CloudWatch logging to monitor the Lambda function.

- Consider adding a dead-letter queue (DLQ) or an on-failure destination. S3 invokes Lambda asynchronously, so events that still fail after the built-in retries are otherwise discarded.

- Encrypt the S3 bucket and SNS topic if handling sensitive data.
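To apply the environment-variable practice consistently, the function can validate its configuration up front. This is a sketch. `load_config` is a hypothetical helper, and `NOTIFICATION_SUBJECT` is an illustrative optional variable that the Terraform in this post does not set.

```python
import os


def load_config(environ=os.environ) -> dict:
    """Read settings from environment variables, failing fast if required ones are missing."""
    required = ["SNS_TOPIC_ARN"]
    missing = [name for name in required if not environ.get(name)]
    if missing:
        # A clear message here is easier to debug than a later failure inside sns.publish
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {
        "topic_arn": environ["SNS_TOPIC_ARN"],
        # Hypothetical optional override, defaulting to the subject used in this post
        "subject": environ.get("NOTIFICATION_SUBJECT", "S3 Upload Notification"),
    }
```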

Previous blogs

References:

Terraform Configuration Reference:

https://github.com/kbrepository/terraform-aws-experiments-kb/tree/main/S3-Event-Lambda-Sns

Conclusion

Whether it’s invoices, log files, or other data uploads, the S3 → Lambda → SNS pattern provides a robust and scalable way to automate workflows across cloud environments.

It is flexible enough to handle validations, enrichments, and conditional logic before notifying downstream systems, making it a perfect fit for many real-world scenarios.

If you’re already familiar with S3 → SNS → Fan-out, adding Lambda in the middle brings a new intelligence layer to your pipeline.